Local Kubernetes installation

This page is in active development; its content may be inaccurate or incomplete.

The following describes a local Kubernetes installation using minikube. The required files are in examples/kubernetes. Start a minikube cluster with 2 CPUs, 4096 MB of memory, and the Docker driver:

minikube start --cpus 2 --memory 4096 --driver=docker

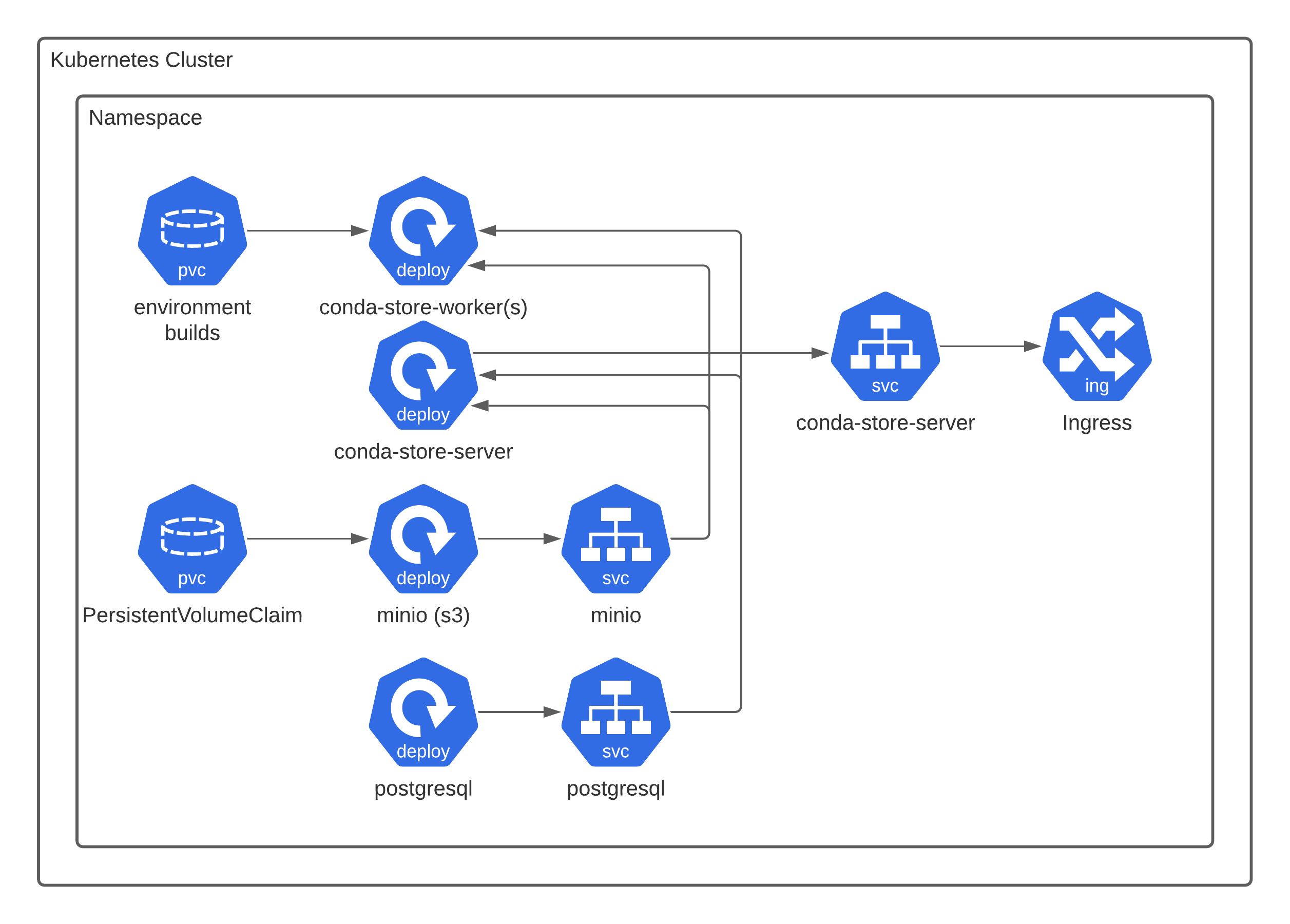

Next, deploy the conda-store components. conda-store works with any S3-compatible object store and with any database supported by SQLAlchemy. The Docker image is currently built with support for PostgreSQL and SQLite only; other database engines were left out to keep the image small. To use a different database, consult the SQLAlchemy documentation on supporting it, then build a custom Docker image that includes the required driver.

You may also not need the bundled MinIO and PostgreSQL deployments if you can use existing infrastructure instead. On AWS, for example, this could mean Amazon RDS and S3; consult your cloud provider for compatible services. In general, conda-store will work as long as the database is supported by SQLAlchemy and the object store is S3-compatible.

The deployment uses kustomize, which is part of the Kubernetes project itself and is built into kubectl through the -k flag:

kubectl apply -k examples/kubernetes
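If you replace the bundled PostgreSQL deployment with an existing database (for example Amazon RDS), conda-store needs a SQLAlchemy connection URL for it. The sketch below only assembles such URLs in SQLAlchemy's dialect+driver://user:password@host:port/database form. The host, database name, credentials and SQLite path are hypothetical placeholders, and the psycopg2 driver is an assumption about what the image ships. Check the reference guide for the setting that accepts this URL in your conda-store version.

```shell
# Hypothetical placeholder values: substitute your own host, database and credentials.
DB_USER="conda-store"
DB_PASSWORD="change-me"
DB_HOST="postgres"        # e.g. an in-cluster Service name or an Amazon RDS endpoint
DB_PORT="5432"
DB_NAME="conda-store"

# PostgreSQL through the psycopg2 driver (assumed to be the driver in the image).
POSTGRES_URL="postgresql+psycopg2://${DB_USER}:${DB_PASSWORD}@${DB_HOST}:${DB_PORT}/${DB_NAME}"

# SQLite: "sqlite:///" followed by a path, so an absolute path gives four slashes.
SQLITE_URL="sqlite:////var/lib/conda-store/conda-store.sqlite"

echo "$POSTGRES_URL"
echo "$SQLITE_URL"
```

Characters such as @, : or / in a password must be percent-encoded before they are placed in the URL.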

Make sure to change all the usernames and passwords for the deployment.
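One way to pick replacement credentials is to generate random ones locally, as in the sketch below. The helper gen_password and the variable names are hypothetical; where the new values go depends on where the manifests in examples/kubernetes set the MinIO and PostgreSQL credentials.

```shell
# Hypothetical helper: print a random alphanumeric string of length $1 (default 32).
# 48 random bytes encode to 64 base64 characters; after removing any "+" and "/",
# far more than 32 characters remain, so lengths up to about 60 are safe.
gen_password() {
  head -c 48 /dev/urandom | base64 | LC_ALL=C tr -dc 'A-Za-z0-9' | cut -c "1-${1:-32}"
}

# Generate one independent secret per service.
MINIO_PASSWORD="$(gen_password 32)"
POSTGRES_PASSWORD="$(gen_password 32)"
```

Restricting the output to letters and digits avoids quoting problems in YAML files and connection URLs.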

If the installation succeeded, you should be able to port-forward the conda-store web server:

kubectl port-forward service/conda-store-server 8080:8080

Then visit http://localhost:8080 in your web browser.
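Because kubectl port-forward keeps running in the foreground, it can be convenient to start it in the background and wait until the server answers before opening the browser. The sketch below is a hypothetical shell helper (wait_for_http is not part of conda-store or kubectl) that polls a URL with curl until it returns a non-error HTTP status.

```shell
# Hypothetical helper: poll URL until it answers with a non-error HTTP status.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 2).
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP 4xx/5xx as failure; -s: no progress output; -o: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    # Sleep only when another attempt will follow.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, run kubectl port-forward service/conda-store-server 8080:8080 & and then wait_for_http http://localhost:8080/ before opening the page. A redirect counts as success, because curl -f only fails on 4xx and 5xx responses.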

To enable viewing logs, also port-forward the MinIO service:

kubectl port-forward service/minio 30900:9000

For additional configuration options, see the reference guide.

A quick way to check that conda-store is functioning properly is to apply the jupyterlab-conda-store pod, which causes conda-store to build an environment with JupyterLab and NumPy. This pod is not needed for running conda-store itself. Apply it with:

kubectl apply -f examples/kubernetes/test/jupyterlab-conda-store.yaml

Alternatively, if you mount a ReadWriteMany volume, such as NFS or Amazon EFS, into the conda-store-worker container, you can mount the environments built by conda-store elsewhere and use them directly. Note that NFS can be significantly slower when creating environments (see the explanation about performance).
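A ReadWriteMany volume is requested through a PersistentVolumeClaim. The sketch below only writes such a manifest to a local file. The claim name, the size and the storageClassName efs-sc are hypothetical, and the storage class must be one that actually supports ReadWriteMany (for example, one backed by NFS or the Amazon EFS CSI driver).

```shell
# Write a PersistentVolumeClaim manifest that requests ReadWriteMany access.
# Claim name, storage class and size are hypothetical placeholders: efs-sc must be
# replaced by a storage class in your cluster that supports ReadWriteMany.
cat > conda-store-environments-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: conda-store-environments
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 20Gi
EOF
```

Apply it with kubectl apply -f conda-store-environments-pvc.yaml, then reference claimName: conda-store-environments from a volume in the conda-store-worker pod spec and mount it at the directory where the worker builds environments. Other pods can mount the same claim to use those environments.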